Hello Creators!

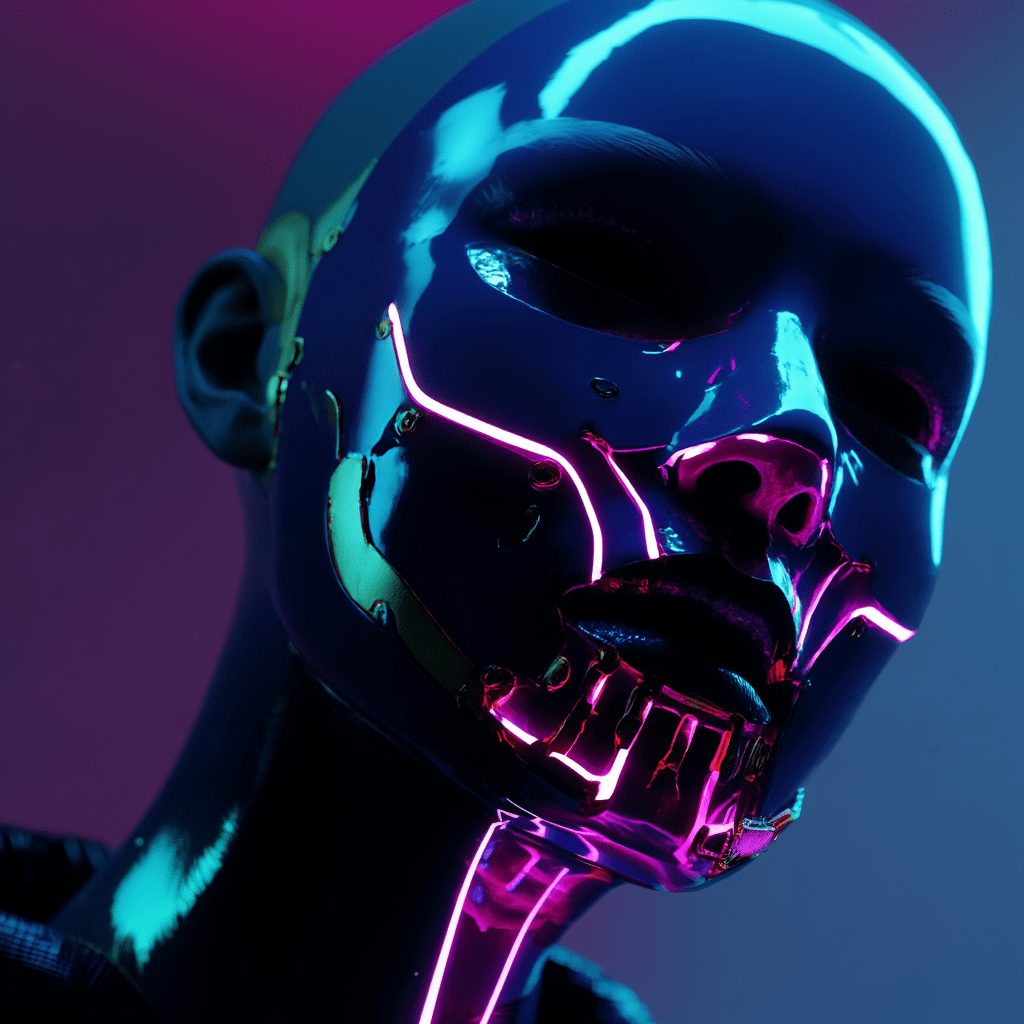

Imagine animating any human face with any motion: no 3D scans, no motion capture suits, just a single image and a driving video. That’s the promise of DreamActor-M1, and yes, it’s as wild as it sounds.

DreamActor-M1 is a new open-source system developed by researchers at ByteDance. It turns a single portrait into a fully animated actor using any driving video, whether it’s a dance, a dramatic head turn, or just someone blinking naturally. The results? Uncanny. In a good way.

So what makes it special?

Most facial reenactment tools either:

- Rely on 3D models (hello uncanny valley), or

- Struggle with identity consistency when generating motion.

DreamActor-M1 tackles both. Its modular architecture decouples motion from appearance, combining a face encoder, a motion estimator, and a texture-aware warper. The system is described as fast, produces high-fidelity faces, and keeps identity intact even through challenging motion such as head turns or expressive facial gestures.
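For the curious, here is a toy sketch of what "decoupling motion and appearance" can mean. To be clear, this is not DreamActor-M1's code: the names `estimate_motion`, `warp`, and `animate` are made up for illustration, the "images" are tiny grids of numbers, and the "motion" is just a sideways shift. The point is only that motion comes from the driving frames while every pixel comes from the source portrait.

```python
from typing import List, Tuple

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def centroid(frame: Image) -> Tuple[float, float]:
    """Intensity-weighted centre (row, col); assumes the frame is not all zeros."""
    total = sum(sum(row) for row in frame)
    r = sum(i * sum(row) for i, row in enumerate(frame)) / total
    c = sum(j * v for row in frame for j, v in enumerate(row)) / total
    return r, c


def estimate_motion(driving: List[Image]) -> List[Tuple[int, int]]:
    """Motion branch (hypothetical): shift of each frame relative to the first."""
    r0, c0 = centroid(driving[0])
    return [(round(r - r0), round(c - c0)) for r, c in map(centroid, driving)]


def warp(source: Image, shift: Tuple[int, int]) -> Image:
    """Appearance branch (hypothetical): move source pixels, wrapping at edges."""
    h, w = len(source), len(source[0])
    dr, dc = shift
    return [[source[(i - dr) % h][(j - dc) % w] for j in range(w)]
            for i in range(h)]


def animate(source: Image, driving: List[Image]) -> List[Image]:
    """Apply the driving video's motion to the source image's pixels."""
    return [warp(source, s) for s in estimate_motion(driving)]


# A 3x3 "portrait" and a driving clip of a dot sliding left to right.
source = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
driving = [
    [[0, 0, 0], [1, 0, 0], [0, 0, 0]],
    [[0, 0, 0], [0, 1, 0], [0, 0, 0]],
    [[0, 0, 0], [0, 0, 1], [0, 0, 0]],
]
frames = animate(source, driving)
```

Every output frame contains exactly the source's pixel values, only rearranged, which is the toy version of "identity stays intact while motion changes". A real system swaps the shift for learned motion features and the grid for a neural renderer.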

And here’s the kicker: it doesn’t need your face from different angles. A single image is enough.

What can you use it for?

- AI music videos where one face performs multiple roles.

- Digital doubles in filmmaking.

- Personalized avatars that mimic you in real time.

- Experimental art projects where motion and identity blur.

Oh, and it’s open source, so creators and devs can start playing with it right now.

You can try it on their project page or dive into the GitHub repo to see how it works under the hood.

Our take?

DreamActor-M1 is pushing us closer to real-time, photorealistic digital actors with minimal input. It’s not just another talking head generator — this one can actually act.

We’ll be keeping an eye on it.

Stay curious,

Kinomoto.Mag